Analog AI Inference

20 US Patents Issued & Proof of Silicon 2Q-2025

Introducing Ai Linear’s Patented Analog Inference Engines: Key Benefits

- Significantly Lower Development & Manufacturing Costs: Ai Linear’s analog inference engines are 2-3 orders of magnitude cheaper to develop and produce than digital AI engines based on FinFET technology. Ai Linear’s Analog Ai Inference Technology leverages mainstream, low-cost, lagging-edge digital CMOS processes. The design also eliminates passive resistors and capacitors, which lowers production costs and simplifies the design.

- Significantly Lower Energy Consumption: Analog MAC operations consume 2-3 orders of magnitude less power than their digital FinFET counterparts, making them well suited to edge devices and energy-sensitive applications.

- Parallel Computation & Faster Dynamic Response: Ai Linear’s Analog Ai circuits naturally perform operations in parallel, enabling high throughput and efficient small-to-mid-scale computation. Ai Linear’s current-mode signal processing with small voltage swings ensures rapid response times.

- Compute-in-Memory & Power Efficiency: Integrating memory and MAC cells side by side reduces dynamic power consumption, improving overall energy efficiency. Ai Linear’s topologies are a natural fit for EEPROM or resistive RAM programming options, adding versatility to inference engine configurations. Moreover, they support operation at low supply voltages (approximately VGS + 2VDS), further enhancing energy efficiency.

- Compact Design: The asynchronous, fully connected analog MAC eliminates the need for external memory and costly A/D or D/A conversions in hidden layers, reducing hardware size. Ai Linear’s Analog MAC implementations also use fewer transistors and less chip area, allowing denser hardware integration than digital systems.

- Low Latency & Option for Clockless Operation: Ai Linear’s Ai engine can operate asynchronously without clock cycles, improving efficiency and speeding up computations. Real-time signal processing minimizes latency and accelerates inference tasks, with the option of zero dependence on the Cloud/Internet.

- Natural Data Representation: Analog engines natively process continuous activation signals from sensors, making them ideal for real-world data such as audio and visual inputs.

- Resilience to Variations: Patented circuits improve resistance to temperature changes, power supply fluctuations, and manufacturing process variability. Additionally, the architecture tolerates noise and variability in computations, maintaining robust performance even under imperfect conditions.

- Improved Linearity: Ai Linear’s patented scalar MAC is inherently more accurate by design. Moreover, the MAC topology is suited for analog calibration or trimming of weights and biases, which can enhance linearity in computations.

- Optimized for Batch Normalization: Inherently efficient for operations like batch normalization and scalar multiplication, streamlining neural network inference.

- Programmable Speed: Allows performance tuning through the steady-state current IDD, giving an adjustable trade-off between speed and power.

- Graceful Accuracy Degradation: System accuracy degrades smoothly with increasing activation signal frequency, even without sample-and-hold (S/H) mechanisms.

This combination of efficiency, compactness, and versatility positions Ai Linear’s analog computation technology as a transformative approach for low-cost, low-power, high-speed AI inference with Zero-Internet and/or Zero-Cloud.

LLM & Generative AI Solutions for Ultra-Edge Applications Using Ai Linear’s Analog Ai Inference Technology:

Offering 2 to 3 orders of magnitude lower development and manufacturing costs and 2 to 3 orders of magnitude lower power consumption (compared to Ai on FinFET), Ai Linear’s LLM & Generative AI solutions provide ultra-low-power, near-zero-latency, local AI processing with built-in privacy and inherent cybersecurity. These small-to-mid-scale solutions support multi-modal AI (e.g., sound, image) in edge-based devices like wearables, smartwatches, and smart glasses.

*Edge-Based Application Examples:

- Virtual Assistant: Supports health monitoring, personalized daily recommendations, and multi-modal interactions (voice, touch, gesture).

- Recommendation Engines: Delivers personalized content with minimal latency.

- Sentiment Analysis: Analyzes non-verbal cues to gauge sentiment and improve engagement.

- Content Generation: Produces human-like text, images, and other media.

- Translation & Localization: Provides context-aware translation across languages via handheld or wearable devices.

*Key Use Cases for Ai Linear’s Analog AI Inference Technology:

- Edge AI Devices: Ai Linear’s Analog MAC units enable highly energy-efficient AI processing for edge devices like smartphones, wearables, and IoT sensors.

- Analog-In-Memory Computing: By integrating Ai Linear’s Analog AI Inference MAC with analog memory (e.g., EEPROM, resistive RAM), this technology enables efficient in-memory neural network inference, reducing data movement. Ai Linear has patents pending for applications like Ai-In-Pixel, sound and vibration monitoring, and anomaly detection (for industrial, security, retail, and consumer markets).

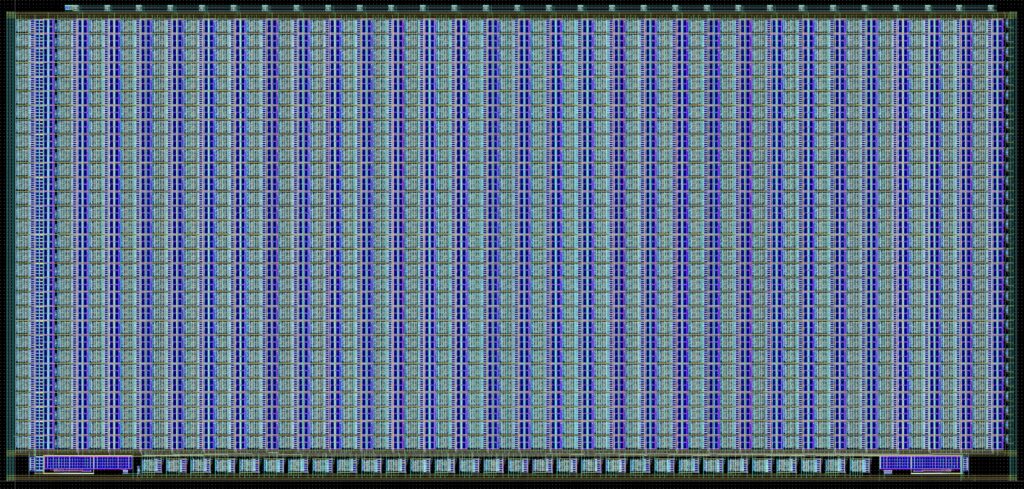

BNN Analog AI Inference IC: Traits (Silicon 2Q25)

- Datasheet Work in Progress (Testing forthcoming)

- Patented

- Tiny & Low-Power Binary-Neural-Network (BNN) Compute-in-memory (CIM) Multiply-Accumulate (MAC)

- Asynchronous simultaneous MAC operation

- An 80×80 weights-in-memory signal matrix (W) is multiplied by an 80×1 activation signal matrix (S) and added to an 80×1 bias signal matrix (B) to generate the final 80×1 MAC output signal matrix, [W]×[S] + [B]

- Test-chip includes the MAC units, CIM, and serial SPI I/O ports for W, S, B, and MAC output signals
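The 80×80 operation listed above can be modeled numerically as a plain matrix-vector multiply plus bias. The following is a minimal software sketch only, not a description of the analog circuit: the function name `mac` is hypothetical, and the -1/+1 encoding of the binary W, S, and B values is an assumption, since the source does not state how binary values are represented.

```python
import random

N = 80  # matrix dimension stated for the BNN test chip


def mac(W, S, B):
    """Return [W] x [S] + [B] for a square matrix W and vectors S, B."""
    return [sum(w * s for w, s in zip(row, S)) + b for row, b in zip(W, B)]


rng = random.Random(0)  # fixed seed so the sketch is repeatable

# Assumption: binary values are encoded as -1/+1 (not specified in the source).
W = [[rng.choice((-1, 1)) for _ in range(N)] for _ in range(N)]
S = [rng.choice((-1, 1)) for _ in range(N)]
B = [rng.choice((-1, 1)) for _ in range(N)]

out = mac(W, S, B)  # 80 output values, one per row of W
print(len(out), min(out), max(out))
```

Under this assumed encoding, every output is an odd integer between -81 and +81, because each row contributes 80 terms of ±1 plus one ±1 bias.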

NN Analog AI Inference IC: Traits (Silicon 2Q25)

- Datasheet Work in Progress (Testing forthcoming)

- Patented

- Tiny & Low-Power Neural-Network (NN) Compute-in-memory (CIM) Multiply-Accumulate (MAC)

- Asynchronous simultaneous MAC operation

- A 30×30 weights-in-memory signal matrix (W) is multiplied by a 30×1 activation signal matrix (S) and added to a 30×1 bias signal matrix (B) to generate the final 30×1 MAC output signal matrix, [W]×[S] + [B]

- Test-chip includes the MAC units, CIM, and serial SPI I/O ports for W, S, B, and MAC output signals, where each of the W, S, and B signals has 6 bits of resolution
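To illustrate what 6 bits of resolution implies for the 30×30 operation, the sketch below quantizes inputs to 64 levels and computes [W]×[S] + [B] on the resulting integer codes. It is a software model under stated assumptions, not the chip's actual data format: the unsigned 0 to 63 encoding, the normalized [0, 1] input range, and the names `quantize` and `mac` are all hypothetical.

```python
N = 30               # matrix dimension stated for the NN test chip
BITS = 6             # resolution stated for the W, S, and B signals
LEVELS = 2 ** BITS   # 64 distinct codes


def quantize(x, bits=BITS):
    """Map x in [0.0, 1.0] to the nearest unsigned code (assumed encoding)."""
    top = 2 ** bits - 1
    x = min(max(x, 0.0), 1.0)  # clamp out-of-range inputs
    return round(x * top)


def mac(W, S, B):
    """Return [W] x [S] + [B] on integer codes."""
    return [sum(w * s for w, s in zip(row, S)) + b for row, b in zip(W, B)]


# Illustrative test pattern only; these values do not come from the source.
W = [[quantize(((i + j) % N) / (N - 1)) for j in range(N)] for i in range(N)]
S = [quantize(j / (N - 1)) for j in range(N)]
B = [quantize(0.5)] * N

out = mac(W, S, B)

# Largest possible output if every W, S, and B code sits at full scale.
worst_case = N * (LEVELS - 1) ** 2 + (LEVELS - 1)
print(len(out), max(out), worst_case)
```

With this assumed unsigned encoding, the full-scale output is 30 × 63 × 63 + 63 = 119,133, which gives a rough sense of the dynamic range the MAC output has to cover relative to its 6-bit inputs.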